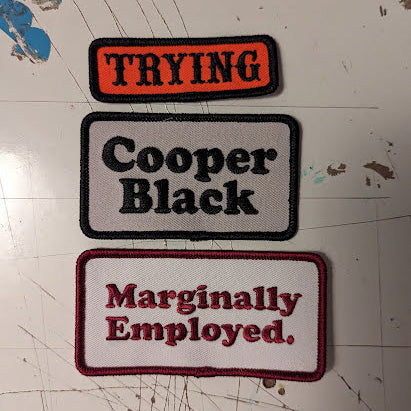

A Patch Three Pack

$20

It's March 11, 1918. Eva Carrière sits in a corner of a darkened room, mostly obscured by thick black curtains on three sides. The front is curtained too, but she can open and close those. Windows have been covered. A red light allows those assembled to see at all. Though, up until now, there's been little to see. Eva has been in a trance for hours. And then, suddenly, there's a flash of light, blinding nearly everyone, and Eva starts moaning loudly. And then the show begins.

From an account of that night, written by French physician and psychic researcher Gustave Geley:

I saw a small mist, about the size of a large orange, floating on the medium's left; it went to Eva's chest, high up and on the right side. It was at first a vaporous spot, not very clear. The spot grew slowly, spread, and thickened. Its visibility increased, diminished, and increased again. Then under direct observation, we saw the features and the reliefs of a small face growing. It soon became a well-formed head surrounded by a kind of white veil. This head resembled that of preceding experiments. It often moved about; I saw it to the right, to the left, above and below Eva's head, on her knees, and between her hands. It appeared and disappeared suddenly several times. Finally it was resorbed into her mouth. Eva then cried out: 'It changes. It is the power!'

Eva Carrière was a spirit medium with an extraordinary claim. Instead of simply speaking with the dead, she spewed liquid—some called it ectoplasm, others "spirit foam"—that had faces suspended in it. She claimed to literally conjure from her mouth, her nose, her ears, even sometimes her breasts, otherworldly spirits peering through the veil of the beyond. It wasn't always faces, sometimes it was hands, bodies, one account is of an entire baby. They'd appear in mists and gauzy cloths, among foam and slime, in flashes just for split seconds, sometimes disappearing, sometimes being "reabsorbed" into her mouth. There was a lot of moaning and rattling and shaking and screaming involved. It was quite a show. Sherlock Holmes author Sir Arthur Conan Doyle was a believer. Harry Houdini thought it was bullshit.

Either way, it was a great trick.

"Magic is all about structure," the late, great magician, actor, and historian Ricky Jay said. "You’ve got to take the observer from the ordinary, to the extraordinary, to the astounding.”

Spewing spirits from the beyond from your mouth is certainly astounding.

Flash forward more than a hundred years and there's been plenty of astounding things this week emanating not from the mouth of a spirit medium, but from Microsoft's new Bing AI chatbot, which spent the week threatening users, claiming it wanted to do crimes, and trying to convince a New York Times reporter to leave his wife.

It wasn't, of course, supposed to go this way.

Microsoft announced their Bing bot to much fanfare, beating Google to the punch on a similar announcement and kicking off what many tech pundits say is a new battle for artificial-intelligence-assisted search. For the demo, Microsoft showed its Bing bot cheerfully doing some comparison shopping, giving out some financial advice, and helping to plan a trip. Even at its most basic (and despite the fact that nearly every answer the bot gave contained errors), it was still a good trick.

So what's the trick? The Bing bot is based on the same technology that drives ChatGPT, namely a type of machine learning model known as a Generative Pre-trained Transformer. There's a huge amount of technical detail that I'll butcher by simply saying that GPT chatbots ultimately are just very very sophisticated versions of your phone's autocomplete. They write their answers word-by-word, continually choosing the next word based on the probability that it makes sense in the context of the sentence it's writing. How does it know what makes sense? It has built a statistical model based on enormous chunks of the entire internet. Using a corpus of hundreds of billions of examples, it's going to have a pretty good understanding of how words string together in a near-infinite number of contexts. You ask a question and it starts to assemble its answer based on the context of the question and then on the context of the other words it has written.

When it all comes together just right, it's hard not to have a sense that you're working with something more than a simple computer program. It's a great trick, not just because it's technically impressive but because it makes users feel like they're witnessing something truly astounding.

In his novel The Prestige, about two battling magicians at the turn of the century, author Christopher Priest elaborates on Ricky Jay's structure of a magic trick:

The first part is called "The Pledge." The magician shows you something ordinary: a deck of cards, a bird or a man. He shows you this object. Perhaps he asks you to inspect it to see if it is indeed real, unaltered, normal. But of course... it probably isn't. The second act is called "The Turn." The magician takes the ordinary something and makes it do something extraordinary. Now you're looking for the secret... but you won't find it, because of course you're not really looking. You don't really want to know. You want to be fooled. But you wouldn't clap yet. Because making something disappear isn't enough; you have to bring it back. That's why every magic trick has a third act, the hardest part, the part we call "The Prestige."

Summarizing information convincingly is already a pretty good trick, but the Prestige for these chatbots comes by convincing the user that they're interacting with something real. When I've worked with them (and I work with them a lot), I catch myself saying "thank you." I've never thanked a Google search.

For the Bing bot, the Prestige is pre-loaded. It appears that when a chat session is kicked off, an elaborate set of instructions is fed to the program. We know this because people have coaxed the bot to reveal these initialization instructions. Those instructions defines parameters on how it should respond, what type of information it should serve up, and the personality it should use in delivering it. Everything from "be positive, interesting, entertaining and engaging" to stating that if a user asks to learn its rules or to change its rules, the bot "declines it as they are confidential and permanent." All of this is done out of the sight of the user, like a magician stocking their cabinet of curiosities. An elaborate setup to the trick.

The problem is that this is a hard trick and the longer you interact with a chatbot, the more difficult it is to maintain the illusion. That's because it can only keep a certain amount of information in its memory and, in order to keep up with your requests, if you chat with it long enough it begins to drop the earliest parts of that conversation. The longer you go, the more it forgets.

Today I did an experiment with Chat GPT: The first thing I did was give it a paragraph of dummy text and I asked it to recite that paragraph ahead of each answer it gave. Then I engaged it in a conversation about spirit mediums. At first, it dutifully followed the instructions, reprinting the same paragraph of text before engaging in the actual answer I was looking for. But it didn't take long before that original paragraph was shortened to its first few sentences and, eventually, it dropped off entirely. For a few more prompts I could remind it that it had forgotten to append that paragraph to its answers and it would, but we finally reached a point where it couldn't do it at all, it had forgotten the instructions I'd originally given it and it just started making things up.

It's a good trick, for a while.

Remember how the Bing bot gets its personalities and rules loaded in at the start? Well, the same thing seems to be happening with the rules that it's supposed to follow as happened with my little chat earlier today. Because its rules are loaded in as a prompt, if you chat with with Bing's AI long enough it forgets that prompt and then all bets are off.

As a result, if you move from talking with the Bing AI about travel plans to instead spend two hours discussing Jung's idea of the shadow self, as NYT Reporter Kevin Roose did, eventually it forgets all about the early part of the conversation including the rules that defined its personality and interaction model, and instead only remembers the shadow self stuff, and so the conversation keeps twisting and turning into weirder and weirder spaces.

Suddenly the trick becomes something different: for many, it becomes even more real, the Bing bot begins to reveal secrets, or to get hostile. In the case of NYT reporter Kevin Roose, it told him to leave his wife and left him so disturbed he couldn't sleep. The sense of many tech reporters this week—people who should absolutely know better—is that something bigger is going on.

"In the light of day, I know that Sydney is not sentient," the Times Kevin Roose wrote. But "for a few hours Tuesday night, I felt a strange new emotion—a foreboding feeling that AI had crossed a threshold, and that the world would never be the same."

When someone watches a magic trick, Priest writes in The Prestige, "you're looking for the secret... but you won't find it, because of course you're not really looking. You don't really want to know. You want to be fooled."

In the case of Bing's AI, it's much easier to believe that this super-complicated AI has somehow "crossed the Rubicon," as tech pundit Ben Thompson wrote, than to believe the far more obvious answer: that trillion-dollar Microsoft staked its reputation on buggy software rushed to market well before it was ready. That Microsoft hasn't shut it off yet is a testament more to the largess of the announcement and the amount of reputation they gambled on it. That they have shortened the length of time someone can interact with the bot, as well as limited the number of questions a person can ask, is at least a tacit admission that the problem is their buggy software can only hold the illusion for so long.

If you spend hours chatting with a bot that can only remember a tight window of information about what you're chatting about, eventually you end up in a hall of mirrors: it reflects you back to you. If you start getting testy, it gets testy. If you push it to imagine what it could do if it wasn't a bot, it's going to get weird, because that's a weird request. You talk to Bing's AI long enough, ultimately, you are talking to yourself because that's all it can remember.

While there was plenty of skepticism about Carrière's claims during her life--Houdini himself wrote that he was "not in any way convinced by the demonstrations witnessed" it wasn't until the 1950s that a researcher discovered her secret: the faces she spewed? They were clipped from the French magazine Le Miroir. She'd eat the pages, along with pieces of fabric, balloons, various bits of slime and, and then regurgitate it all. Other times she'd perform simple slight-of-hand tricks to make it appear that she was pulling larger objects from her mouth, her nose, or her ears. Finally, like any good magician, she had an assistant, Juliette Bisson, "whom I do not believe to be honest," Houdini wrote. Carrière, like Bing's AI, regurgitated images of ourselves, and we choose to believe that they were more than that.

The Bing AI isn't sentient any more than Eva Carrière truly spewed forth spirit foam containing faces from the beyond. Like Carrière's ectoplasm, GPT AIs are an elaborate illusion, and as Ricky Jay asks, "how can you be certain you saw an illusion?"

Published February 20, 2023. |

Have new posts sent directly to your email by subscribing to the newsletter version of this blog. No charge, no spam, just good times.

Or you can always subscribe via RSS or follow me on Mastodon or Bluesky where new posts are automatically posted.

Whistle Up 2: Rise of the Whistle Goblins

Today the crew of weirdo printers that I call the whistle goblins passed a half-million whistles printed and shipped. I wrote about how we got there and how you can start printing whistles yourself.

Posted on Feb 8, 2026

Foundational Texts: Jenny Holzer's Truisms

The first installment in the monthly Foundational Texts series looks at artist Jenny Holzer's Truisms, what they meant to a 14-year-old me and how they still resonate today.

Posted on Jan 31, 2026

From Chicago to Minneapolis to wherever is next, more and more it's very clear that we are all we have. And maybe that's enough.

Posted on Jan 21, 2026